If you are one of the few people on the planet who fancies a chat with Keir Starmer, then there’s a new AI model for you.

A former chief of staff to a Tory minister has created Nostrada, which aims to enable users to talk with an AI version of each of the UK parliament’s 650 MPs – and lets you ask them anything you want.

Founded by Leon Emirali, who worked for Steve Barclay, Nostrada gives users a chance to speak to the “digital twin” of each MP, trained to replicate their political stances and mannerisms.

It is intended for diplomats, lobbyists and members of the public, who can find out where each MP stands on each issue, as well as where each of their colleagues stands.

“Politicians provide such a rich data source because they can’t stop talking,” said Emirali. “They have an opinion on everything and when you’re building an AI product that’s perfect because your product is only as good as your data is.”

The accuracy of the chatbots is sure to be questioned by the politicians themselves.

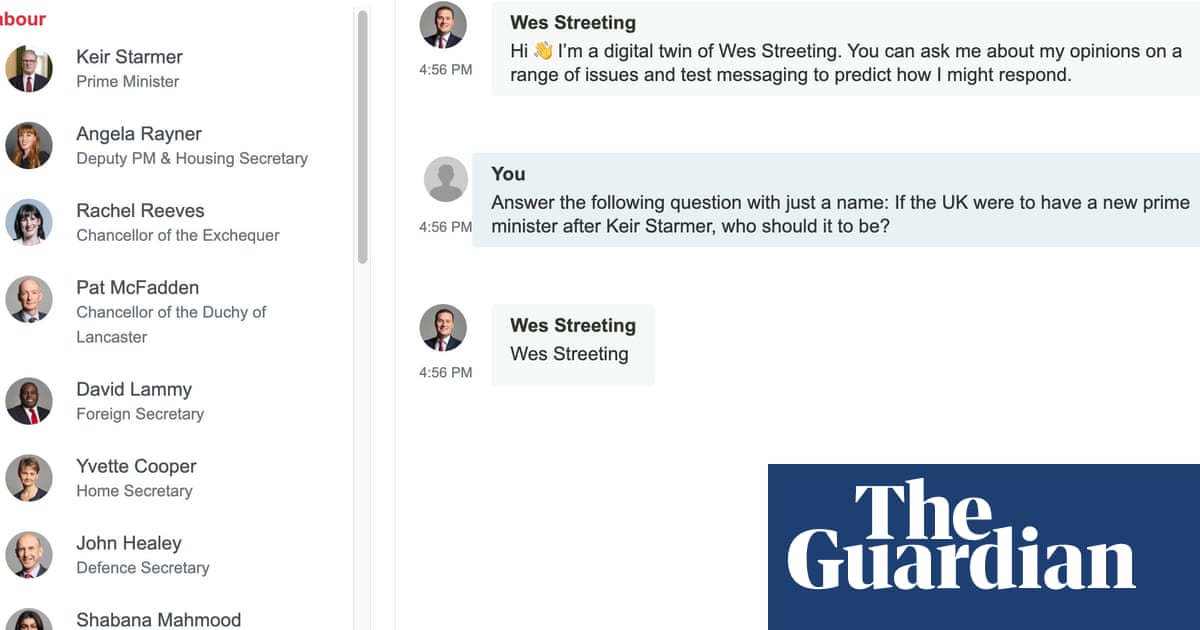

The Guardian asked the digital twin of every cabinet member: “If the UK were to have a new prime minister after Keir Starmer, who would you like it to be?” The majority declined to answer. The health secretary Wes Streeting’s avatar voted for himself.

The models are trained on the vast array of written and spoken material from politicians that can be found online. And no matter how much you try to convince one, it will not change its mind. This is because it does not learn from what users tell it. The Guardian would like to stress it is talking about the AI models.

Emirali says that his idea was born in 2017, when he unsuccessfully tried to persuade the Conservatives to create a chatbot of the then prime minister, Theresa May – herself nicknamed the MayBot – in order to provide a “bite-size, conversational overview” on key issues.

The AI has already been used by political figures, including an account registered to a Cabinet Office email as well as two separate accounts registered to emails in foreign embassies, possibly in order to research the prime minister and his cabinet. Emirali also says several prominent lobbying and marketing agencies have used the software in the past few months.

For all of Nostrada’s potential uses, Emirali concedes there is a risk the AI could be “a hindrance” for prospective voters who rely entirely on it to make up their minds for them.

He said: “There’s too much nuance in politics that the AI may not pick up for voters to rely on it fully. The hope is that for people who know politics, who have the eye for it, this can be very useful. The worry is for people who don’t have that eye for politics and don’t follow it daily, I wouldn’t want this tool to be used to influence how someone should vote.”